Contract-First API Development: The Spec as Executable Truth

A deep dive into contract-first API development with OpenAPI and Python. Learn to treat your API specification as an executable contract that validates requests, generates clients, and catches breaking changes before they ship.

The API documentation said the endpoint returned created_at as an ISO timestamp. The server returned a Unix epoch integer. The mobile team built their date parser around the docs. Three weeks of work, shipped to production, crashed on every API call.

“But the docs were updated last month,” someone said in the postmortem.

They were. The code was updated two months ago.

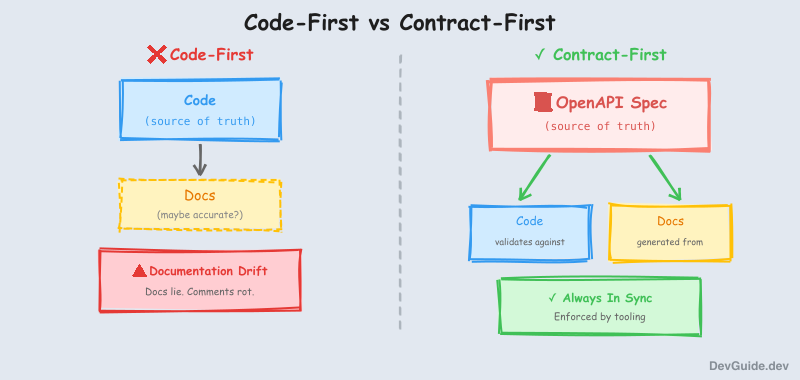

This is the fundamental problem with treating API documentation as a separate artifact from your code. Documentation drifts. Comments lie. Wikis become graveyards. The only source of truth is the running code - and by the time you discover a mismatch, you’ve already shipped broken integrations.

Contract-first API development inverts this. You write the specification first, then generate and validate everything from it. The spec isn’t documentation - it’s an executable contract that your code must obey.

What “Contract-First” Actually Means

In code-first development, you write your handlers, maybe add some decorators, and generate documentation as an afterthought. The code is the source of truth; the docs are a reflection that may or may not be accurate.

Contract-first flips this:

- Design the API specification before writing any implementation code

- Validate requests and responses against the spec at runtime

- Generate code (server stubs, client SDKs) from the spec

- Enforce the contract in CI - reject changes that violate it

The specification becomes the single source of truth. Your code must conform to it, not the other way around.

This isn’t just a documentation strategy. It’s a fundamentally different way of thinking about API development - one where the interface contract is a first-class engineering artifact, not a byproduct.

The Task Tracker API

Let’s build something real. We’ll create a task tracker API with four endpoints:

| Method | Path | Description |
|---|---|---|
| POST | /tasks | Create a new task |
| GET | /tasks | List all tasks (with filtering) |
| GET | /tasks/{task_id} | Get a specific task |
| PATCH | /tasks/{task_id} | Update a task |

Simple enough to understand, complex enough to show real patterns: request validation, query parameters, path parameters, error responses, and partial updates.

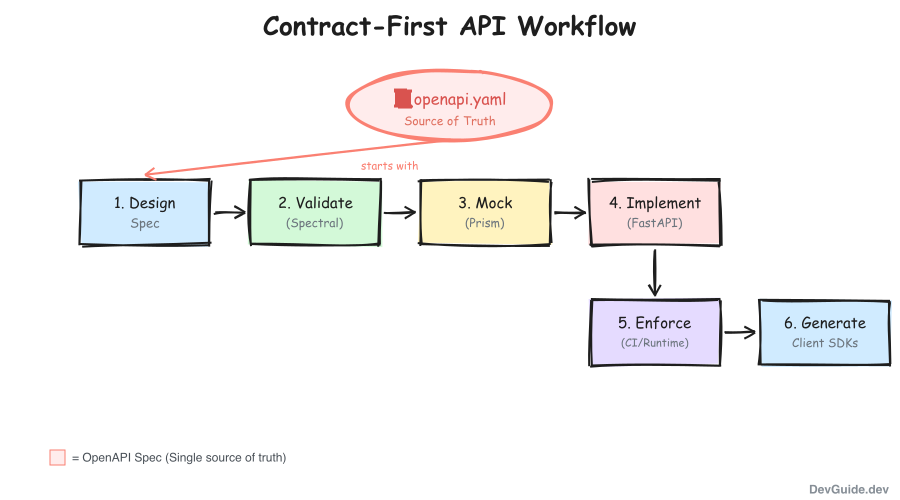

Step 1: Write the Specification First

Before writing a single line of Python, we define the contract. This is the complete OpenAPI 3.1 specification for our task tracker:

# openapi.yaml

openapi: 3.1.0

info:

title: Task Tracker API

description: A simple task tracking API demonstrating contract-first development

version: 1.0.0

contact:

name: API Support

email: api@example.com

servers:

- url: http://localhost:8000

description: Local development

paths:

/tasks:

post:

operationId: createTask

summary: Create a new task

description: Creates a new task and returns the created resource

tags:

- tasks

requestBody:

required: true

content:

application/json:

schema:

$ref: '#/components/schemas/TaskCreate'

examples:

basic:

summary: Basic task

value:

title: 'Review pull request'

description: 'Review the authentication refactor PR'

priority: 'high'

minimal:

summary: Minimal task

value:

title: 'Quick fix'

responses:

'201':

description: Task created successfully

content:

application/json:

schema:

$ref: '#/components/schemas/Task'

headers:

Location:

description: URL of the created task

schema:

type: string

format: uri

'400':

$ref: '#/components/responses/ValidationError'

'422':

$ref: '#/components/responses/UnprocessableEntity'

get:

operationId: listTasks

summary: List all tasks

description: Returns a paginated list of tasks with optional filtering

tags:

- tasks

parameters:

- name: status

in: query

description: Filter by task status

schema:

$ref: '#/components/schemas/TaskStatus'

- name: priority

in: query

description: Filter by priority level

schema:

$ref: '#/components/schemas/Priority'

- name: limit

in: query

description: Maximum number of tasks to return

schema:

type: integer

minimum: 1

maximum: 100

default: 20

- name: offset

in: query

description: Number of tasks to skip

schema:

type: integer

minimum: 0

default: 0

responses:

'200':

description: List of tasks

content:

application/json:

schema:

$ref: '#/components/schemas/TaskList'

/tasks/{task_id}:

parameters:

- name: task_id

in: path

required: true

description: Unique task identifier

schema:

type: string

format: uuid

get:

operationId: getTask

summary: Get a specific task

tags:

- tasks

responses:

'200':

description: Task details

content:

application/json:

schema:

$ref: '#/components/schemas/Task'

'404':

$ref: '#/components/responses/NotFound'

patch:

operationId: updateTask

summary: Update a task

description: Partially updates a task. Only provided fields are modified.

tags:

- tasks

requestBody:

required: true

content:

application/json:

schema:

$ref: '#/components/schemas/TaskUpdate'

responses:

'200':

description: Task updated successfully

content:

application/json:

schema:

$ref: '#/components/schemas/Task'

'400':

$ref: '#/components/responses/ValidationError'

'404':

$ref: '#/components/responses/NotFound'

components:

schemas:

Priority:

type: string

enum: [low, medium, high, critical]

description: Task priority level

TaskStatus:

type: string

enum: [pending, in_progress, completed, cancelled]

description: Current status of the task

TaskCreate:

type: object

required:

- title

properties:

title:

type: string

minLength: 1

maxLength: 200

description: Short description of the task

examples:

- 'Review pull request'

description:

type: string

maxLength: 2000

description: Detailed description of what needs to be done

priority:

$ref: '#/components/schemas/Priority'

default: medium

due_date:

type: string

format: date-time

description: When the task should be completed

TaskUpdate:

type: object

minProperties: 1

properties:

title:

type: string

minLength: 1

maxLength: 200

description:

type: string

maxLength: 2000

priority:

$ref: '#/components/schemas/Priority'

status:

$ref: '#/components/schemas/TaskStatus'

due_date:

type: string

format: date-time

Task:

type: object

required:

- id

- title

- status

- priority

- created_at

- updated_at

properties:

id:

type: string

format: uuid

description: Unique identifier for the task

title:

type: string

description:

type: string

nullable: true

priority:

$ref: '#/components/schemas/Priority'

status:

$ref: '#/components/schemas/TaskStatus'

due_date:

type: string

format: date-time

nullable: true

created_at:

type: string

format: date-time

description: When the task was created

updated_at:

type: string

format: date-time

description: When the task was last modified

TaskList:

type: object

required:

- items

- total

- limit

- offset

properties:

items:

type: array

items:

$ref: '#/components/schemas/Task'

total:

type: integer

minimum: 0

description: Total number of tasks matching the filter

limit:

type: integer

offset:

type: integer

Error:

type: object

required:

- code

- message

properties:

code:

type: string

description: Machine-readable error code

message:

type: string

description: Human-readable error message

details:

type: object

additionalProperties: true

description: Additional error context

responses:

ValidationError:

description: Request validation failed

content:

application/json:

schema:

$ref: '#/components/schemas/Error'

example:

code: 'VALIDATION_ERROR'

message: 'Request body failed validation'

details:

field: 'title'

reason: 'must be at least 1 character'

UnprocessableEntity:

description: Request was valid but could not be processed

content:

application/json:

schema:

$ref: '#/components/schemas/Error'

example:

code: 'UNPROCESSABLE_ENTITY'

message: 'Due date cannot be in the past'

NotFound:

description: Resource not found

content:

application/json:

schema:

$ref: '#/components/schemas/Error'

example:

code: 'NOT_FOUND'

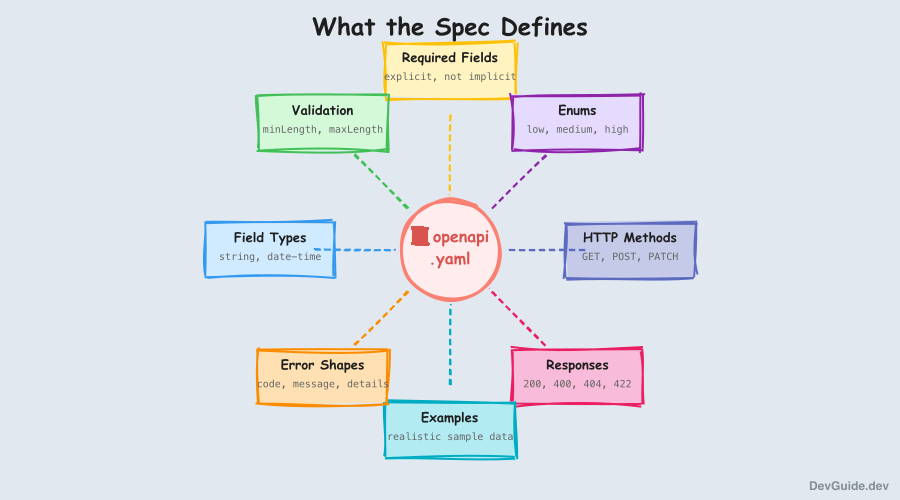

message: 'Task not found'This specification is dense, but notice what it defines:

- Exact field types and formats - `created_at` is `string` with `format: date-time`, not an ambiguous “timestamp”

- Validation constraints - `title` has `minLength: 1` and `maxLength: 200`

- Required vs optional fields - explicitly declared, not implicit

- All possible responses - including error shapes with examples

- Enum values - `priority` can only be `low`, `medium`, `high`, or `critical`

This is the contract. Every request must conform to it. Every response must conform to it. If they don’t, something is broken.
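To see why these declarations count as “executable”, note that each one is a rule a program can check mechanically. The sketch below hand-copies part of `TaskCreate` into a Python dict and enforces it with the standard library only. It is a teaching aid that assumes a flat object schema whose properties are all strings; `TASK_CREATE`, `validate_payload` and `_is_datetime` are hypothetical names, and the real validation later in this article is done by openapi-core, not by this code.

```python
from datetime import datetime
from typing import Any

# Hypothetical, hand-copied subset of components/schemas/TaskCreate
TASK_CREATE: dict[str, Any] = {
    "required": ["title"],
    "properties": {
        "title": {"type": "string", "minLength": 1, "maxLength": 200},
        "description": {"type": "string", "maxLength": 2000},
        "priority": {"type": "string", "enum": ["low", "medium", "high", "critical"]},
        "due_date": {"type": "string", "format": "date-time"},
    },
}


def _is_datetime(value: str) -> bool:
    """Rough RFC 3339 check: must parse as ISO 8601 and include a time part."""
    try:
        # fromisoformat() accepts a trailing "Z" only from Python 3.11 on
        datetime.fromisoformat(value.replace("Z", "+00:00"))
    except ValueError:
        return False
    return "T" in value


def validate_payload(payload: Any, schema: dict[str, Any]) -> list[str]:
    """Return human-readable violations; an empty list means the payload conforms."""
    if not isinstance(payload, dict):
        return ["body must be a JSON object"]
    errors = [f"{name}: required" for name in schema["required"] if name not in payload]
    for name, value in payload.items():
        rules = schema["properties"].get(name)
        if rules is None:
            continue  # extra properties are allowed (no additionalProperties: false)
        if not isinstance(value, str):
            errors.append(f"{name}: expected string, got {type(value).__name__}")
            continue
        if len(value) < rules.get("minLength", 0):
            errors.append(f"{name}: shorter than {rules['minLength']}")
        if "maxLength" in rules and len(value) > rules["maxLength"]:
            errors.append(f"{name}: longer than {rules['maxLength']}")
        if "enum" in rules and value not in rules["enum"]:
            errors.append(f"{name}: must be one of {rules['enum']}")
        if rules.get("format") == "date-time" and not _is_datetime(value):
            errors.append(f"{name}: not an RFC 3339 date-time")
    return errors


print(validate_payload({"title": "Review PR", "priority": "high"}, TASK_CREATE))  # []
print(validate_payload({"title": "", "priority": "urgent"}, TASK_CREATE))
print(validate_payload({"title": "Fix", "due_date": 1717243200}, TASK_CREATE))
# ['due_date: expected string, got int']
```

The last call is the opening story in miniature: an epoch integer where the contract promises a date-time string is rejected by a rule, not caught by someone rereading the docs.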

Step 2: Validate the Specification

Before implementing anything, we check that the spec itself is valid and follows our conventions. We’ll use Spectral, a widely used OpenAPI linter.

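```shell
npm install -g @stoplight/spectral-cli

# Spectral 6+ expects a ruleset file; the minimal one extends the built-in OpenAPI rules
echo "extends: ['spectral:oas']" > .spectral.yaml

spectral lint openapi.yaml
```

Spectral catches issues like: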

- Missing operation IDs

- Inconsistent naming conventions

- Missing response schemas

- Security definition problems
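A linter rule such as “missing operation IDs” is, at its core, a walk over the parsed document. As a rough illustration (not how Spectral is implemented), this stdlib-only sketch runs two rules of that kind over a spec that has already been parsed into a dict; loading the YAML itself would need a parser such as PyYAML. `lint_spec` and the sample `spec` are hypothetical.

```python
from typing import Any

HTTP_METHODS = {"get", "put", "post", "delete", "options", "head", "patch", "trace"}


def lint_spec(spec: dict[str, Any]) -> list[str]:
    """Report operations without an operationId and JSON responses without a schema."""
    problems = []
    for path, path_item in spec.get("paths", {}).items():
        for method, operation in path_item.items():
            if method not in HTTP_METHODS:
                continue  # skip path-level keys such as "parameters"
            where = f"{method.upper()} {path}"
            if "operationId" not in operation:
                problems.append(f"{where}: missing operationId")
            for status, response in operation.get("responses", {}).items():
                if "$ref" in response:
                    continue  # shared responses are checked where they are defined
                for media_type, media in response.get("content", {}).items():
                    if "schema" not in media:
                        problems.append(f"{where} {status} {media_type}: missing schema")
    return problems


# Hypothetical spec fragment with two deliberate mistakes on POST /tasks
spec: dict[str, Any] = {
    "paths": {
        "/tasks": {
            "get": {
                "operationId": "listTasks",
                "responses": {"200": {"content": {"application/json": {"schema": {}}}}},
            },
            "post": {"responses": {"201": {"content": {"application/json": {}}}}},
        },
        "/tasks/{task_id}": {
            "parameters": [],
            "get": {"operationId": "getTask", "responses": {}},
        },
    }
}

for problem in lint_spec(spec):
    print(problem)
```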

For stricter validation, create a .spectral.yaml ruleset:

```yaml
# .spectral.yaml
extends: ['spectral:oas']

rules:
  operation-operationId: error
  operation-description: warn
  oas3-valid-schema-example: error

  # Custom rule: all JSON responses must have examples
  response-must-have-example:
    # Bracket notation is needed because the key contains a slash
    given: "$.paths.*.*.responses.*.content['application/json']"
    then:
      field: 'example'
      function: truthy
```

Run with your custom rules:

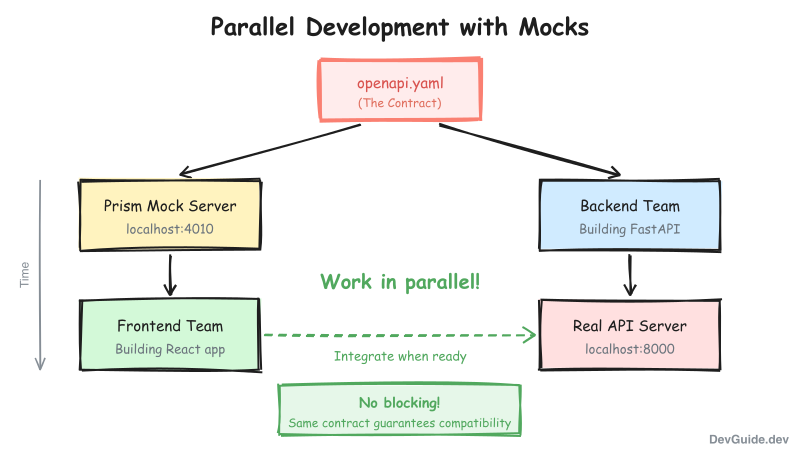

spectral lint openapi.yaml --ruleset .spectral.yamlStep 3: Mock the API Before Implementation

Here’s where contract-first pays its first dividend. Before writing any Python, we can run a fully functional mock server using Prism:

```shell
npm install -g @stoplight/prism-cli
prism mock openapi.yaml
```

Now you have a working API at http://localhost:4010:

```shell
# Create a task - Prism answers with a response built from the spec
# (its examples, or values generated from the schema)
curl -X POST http://localhost:4010/tasks \
  -H "Content-Type: application/json" \
  -d '{"title": "Test task"}'

# Empty title violates minLength: 1, so Prism rejects the request
# using one of the error responses the operation defines (400 or 422)
curl -X POST http://localhost:4010/tasks \
  -H "Content-Type: application/json" \
  -d '{"title": ""}'
```

The mock server:

- Validates all requests against the spec

- Returns example responses defined in the spec

- Returns proper error codes for invalid requests

Frontend teams can start building against this mock immediately. No waiting for backend implementation.
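Conceptually, the core of a mock server is small: look up the operation, pick a success response, and return the example the spec already contains. The sketch below approximates that idea with the standard library, assuming the spec is already a parsed dict. It resolves local `$ref`s and prefers the lowest 2xx status code, but skips request validation, content negotiation and schema-based data generation, all of which Prism does. `resolve_ref`, `mock_response` and the `TaskFound` response are hypothetical.

```python
from typing import Any


def resolve_ref(spec: dict[str, Any], node: dict[str, Any]) -> dict[str, Any]:
    """Follow local references such as '#/components/responses/NotFound'.

    JSON Pointer escapes (~0, ~1) and references to other files are not handled.
    """
    while "$ref" in node:
        target: Any = spec
        for part in node["$ref"].removeprefix("#/").split("/"):
            target = target[part]
        node = target
    return node


def mock_response(spec: dict[str, Any], path: str, method: str) -> tuple[int, Any]:
    """Return (status, body) from the lowest 2xx response that carries a JSON example."""
    responses = spec["paths"][path][method.lower()]["responses"]
    for status in sorted(code for code in responses if code.startswith("2") and code.isdigit()):
        response = resolve_ref(spec, responses[status])
        media = response.get("content", {}).get("application/json", {})
        if "example" in media:
            return int(status), media["example"]
    # Prism would generate a body from the schema here; this sketch just gives up
    return 501, {"code": "NO_EXAMPLE", "message": "No example defined for this operation"}


# Hypothetical spec fragment; TaskFound is an invented shared response
spec: dict[str, Any] = {
    "paths": {
        "/tasks": {
            "post": {"responses": {"201": {"content": {"application/json": {"schema": {}}}}}},
        },
        "/tasks/{task_id}": {
            "get": {
                "responses": {
                    "404": {"$ref": "#/components/responses/NotFound"},
                    "200": {"$ref": "#/components/responses/TaskFound"},
                }
            }
        },
    },
    "components": {
        "responses": {
            "TaskFound": {"content": {"application/json": {"example": {
                "id": "3fa85f64-5717-4562-b3fc-2c963f66afa6",
                "title": "Review pull request",
                "status": "pending",
            }}}},
            "NotFound": {"content": {"application/json": {"example": {
                "code": "NOT_FOUND",
                "message": "Task not found",
            }}}},
        }
    },
}

print(mock_response(spec, "/tasks/{task_id}", "GET"))
print(mock_response(spec, "/tasks", "POST"))
```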

Step 4: Implement with Validation

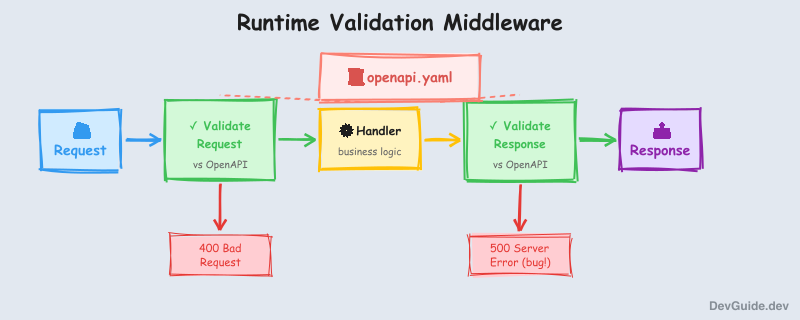

Now we implement the real API. The key architectural decision: validate every request and response against the spec at runtime.
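Stripped of framework details, that decision is a wrapper around every handler: check the request, run the handler, check the response. The framework-free sketch below mirrors the strict and non-strict behavior of the middleware in this section. It is a hypothetical model for illustration only: `with_contract`, the checker functions and `ContractViolation` stand in for openapi-core and FastAPI, which do the real work in the code that follows.

```python
import logging
from typing import Any, Callable

logger = logging.getLogger("contract")

RequestChecker = Callable[[Any], list[str]]        # body -> violations ([] if valid)
ResponseChecker = Callable[[int, Any], list[str]]  # (status, body) -> violations
Handler = Callable[[Any], tuple[int, Any]]         # body -> (status, body)


class ContractViolation(Exception):
    """Raised in strict mode when the server's own response breaks the contract."""


def with_contract(handler: Handler, check_request: RequestChecker,
                  check_response: ResponseChecker, strict: bool = True) -> Handler:
    """Wrap a handler so every call is checked against the contract on the way in and out."""

    def wrapped(body: Any) -> tuple[int, Any]:
        request_errors = check_request(body)
        if request_errors:
            if strict:
                # The client broke the contract: reject before the handler runs
                return 400, {"code": "VALIDATION_ERROR",
                             "message": "Request validation failed",
                             "details": {"errors": request_errors}}
            logger.warning("Request validation errors: %s", request_errors)

        status, response_body = handler(body)

        response_errors = check_response(status, response_body)
        if response_errors:
            # The server broke the contract: always a bug, so always logged
            logger.error("Response validation failed: %s", response_errors)
            if strict:
                raise ContractViolation(response_errors)
        return status, response_body

    return wrapped


# Toy checkers, plus a handler reproducing the opening story's bug
def require_title(body: Any) -> list[str]:
    return [] if isinstance(body, dict) and body.get("title") else ["title: required"]


def created_at_is_string(status: int, body: Any) -> list[str]:
    if status == 201 and not isinstance(body.get("created_at"), str):
        return ["created_at: expected date-time string"]
    return []


def buggy_create(body: Any) -> tuple[int, Any]:
    # Returns a Unix epoch integer instead of an ISO 8601 string
    return 201, {"title": body["title"], "created_at": 1717243200}


create = with_contract(buggy_create, require_title, created_at_is_string)
print(create({}))  # rejected with a 400 before buggy_create runs
try:
    create({"title": "Review PR"})
except ContractViolation as exc:
    print("Contract violation:", exc)
```

The asymmetry is deliberate: a bad request is the client’s problem and gets a 400, while a bad response is the server’s own bug, so strict mode fails loudly instead of sending it.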

We’ll use FastAPI with openapi-core for validation. Here’s the project structure:

```text
task-tracker/
├── openapi.yaml            # The contract (source of truth)
├── app/
│   ├── __init__.py
│   ├── main.py             # FastAPI application
│   ├── validation.py       # OpenAPI validation middleware
│   ├── models.py           # Pydantic models (generated from spec)
│   ├── routes/
│   │   └── tasks.py        # Task endpoints
│   └── storage.py          # In-memory storage for demo
├── tests/
│   ├── test_contract.py    # Contract compliance tests
│   └── test_tasks.py       # Functional tests
├── scripts/
│   └── generate_client.py  # Client SDK generation
├── Dockerfile
├── docker-compose.yaml
├── requirements.txt
└── Makefile
```

The Validation Middleware

This is the core enforcement mechanism. Every request and response passes through OpenAPI validation:

```python
# app/validation.py
import json
import logging
from pathlib import Path

from fastapi import Request, Response
from starlette.middleware.base import BaseHTTPMiddleware, RequestResponseEndpoint
from openapi_core import OpenAPI
from openapi_core.contrib.starlette import StarletteOpenAPIRequest, StarletteOpenAPIResponse

logger = logging.getLogger(__name__)


class ContractViolationError(Exception):
    """Raised when a response violates the API contract."""


class OpenAPIValidationMiddleware(BaseHTTPMiddleware):
    """
    Middleware that validates all requests and responses against the OpenAPI spec.

    strict=True (development, tests): invalid requests get a 400 response and
    invalid responses raise ContractViolationError.
    strict=False (e.g. production): violations are logged and traffic flows through.
    """

    # Paths served outside the contract, such as the spec file itself
    EXEMPT_PATHS = frozenset({"/openapi.yaml"})

    def __init__(self, app, spec_path: Path, strict: bool = True):
        super().__init__(app)
        self.openapi = OpenAPI.from_file_path(str(spec_path))
        self.strict = strict

    async def dispatch(self, request: Request, call_next: RequestResponseEndpoint) -> Response:
        if request.url.path in self.EXEMPT_PATHS:
            return await call_next(request)

        # Validate request. openapi-core 0.19+ takes the body explicitly,
        # because Starlette only exposes it through an async call.
        openapi_request = StarletteOpenAPIRequest(request, body=await request.body())
        request_result = self.openapi.unmarshal_request(openapi_request)

        if request_result.errors:
            error_messages = [str(e) for e in request_result.errors]
            if self.strict:
                return Response(
                    content=json.dumps({
                        "code": "VALIDATION_ERROR",
                        "message": "Request validation failed",
                        "details": {"errors": error_messages},
                    }),
                    status_code=400,
                    media_type="application/json",
                )
            # Log but continue in non-strict mode
            logger.warning("Request validation errors: %s", error_messages)

        # Process the request
        response = await call_next(request)

        # call_next returns a streaming response: buffer the body so it can be
        # validated, then rebuild the response because the stream is now consumed
        body = b"".join([chunk async for chunk in response.body_iterator])

        # Validate response
        openapi_response = StarletteOpenAPIResponse(response, data=body)
        response_result = self.openapi.unmarshal_response(openapi_request, openapi_response)

        if response_result.errors:
            # Response validation failures are always bugs in our code
            logger.error("Response validation failed: %s", response_result.errors)
            if self.strict:
                raise ContractViolationError(
                    f"Response does not match spec: {response_result.errors}"
                )

        return Response(
            content=body,
            status_code=response.status_code,
            headers=dict(response.headers),
            media_type=response.media_type,
        )
```

The FastAPI Application

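```python
# app/main.py
import os
from pathlib import Path

from fastapi import FastAPI, Request
from fastapi.middleware.cors import CORSMiddleware
from fastapi.responses import FileResponse, JSONResponse
from starlette.exceptions import HTTPException as StarletteHTTPException

from app.validation import OpenAPIValidationMiddleware
from app.routes import tasks

SPEC_PATH = Path(__file__).parent.parent / "openapi.yaml"

app = FastAPI(
    title="Task Tracker API",
    description="Contract-first API demonstration",
    version="1.0.0",
    # Disable the auto-generated spec (and /docs) - we serve our spec directly
    openapi_url=None,
)

# Add OpenAPI validation middleware. STRICT_VALIDATION (set in
# docker-compose.yaml) toggles strict mode; it defaults to strict.
app.add_middleware(
    OpenAPIValidationMiddleware,
    spec_path=SPEC_PATH,
    strict=os.getenv("STRICT_VALIDATION", "true").lower() == "true",
)

# Added last, so CORS is the outermost layer and answers preflight requests
app.add_middleware(
    CORSMiddleware,
    allow_origins=["*"],
    allow_methods=["*"],
    allow_headers=["*"],
)


@app.exception_handler(StarletteHTTPException)
async def error_schema_handler(request: Request, exc: StarletteHTTPException) -> JSONResponse:
    # FastAPI would wrap the detail as {"detail": ...}; the spec's Error schema
    # expects "code" and "message" at the top level, so return them unwrapped.
    if isinstance(exc.detail, dict):
        content = exc.detail
    else:
        content = {"code": "HTTP_ERROR", "message": str(exc.detail)}
    return JSONResponse(status_code=exc.status_code, content=content)


# Include routes
app.include_router(tasks.router)


# Serve the OpenAPI spec directly
@app.get("/openapi.yaml", include_in_schema=False)
async def get_openapi_spec() -> FileResponse:
    return FileResponse(SPEC_PATH, media_type="application/yaml")
```

The Task Routes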

```python
# app/routes/tasks.py
from datetime import datetime, timezone
from typing import Optional
from uuid import uuid4

from fastapi import APIRouter, HTTPException, Query, Response
from pydantic import BaseModel, Field

from app.storage import task_storage

router = APIRouter(prefix="/tasks", tags=["tasks"])


# Pydantic models matching the OpenAPI spec exactly
class TaskCreate(BaseModel):
    title: str = Field(..., min_length=1, max_length=200)
    description: Optional[str] = Field(None, max_length=2000)
    priority: str = Field("medium", pattern="^(low|medium|high|critical)$")
    due_date: Optional[datetime] = None


class TaskUpdate(BaseModel):
    title: Optional[str] = Field(None, min_length=1, max_length=200)
    description: Optional[str] = Field(None, max_length=2000)
    priority: Optional[str] = Field(None, pattern="^(low|medium|high|critical)$")
    status: Optional[str] = Field(None, pattern="^(pending|in_progress|completed|cancelled)$")
    due_date: Optional[datetime] = None


class Task(BaseModel):
    id: str
    title: str
    description: Optional[str]
    priority: str
    status: str
    due_date: Optional[datetime]
    created_at: datetime
    updated_at: datetime


class TaskList(BaseModel):
    items: list[Task]
    total: int
    limit: int
    offset: int


@router.post("", status_code=201)
async def create_task(task_data: TaskCreate, response: Response) -> Task:
    """Create a new task."""
    now = datetime.now(timezone.utc)
    task = Task(
        id=str(uuid4()),
        title=task_data.title,
        description=task_data.description,
        priority=task_data.priority,
        status="pending",
        due_date=task_data.due_date,
        created_at=now,
        updated_at=now,
    )
    task_storage.save(task)
    response.headers["Location"] = f"/tasks/{task.id}"
    return task


@router.get("")
async def list_tasks(
    status: Optional[str] = Query(None, pattern="^(pending|in_progress|completed|cancelled)$"),
    priority: Optional[str] = Query(None, pattern="^(low|medium|high|critical)$"),
    limit: int = Query(20, ge=1, le=100),
    offset: int = Query(0, ge=0),
) -> TaskList:
    """List all tasks with optional filtering."""
    tasks = task_storage.list_all()

    # Apply filters
    if status:
        tasks = [t for t in tasks if t.status == status]
    if priority:
        tasks = [t for t in tasks if t.priority == priority]

    total = len(tasks)

    # Apply pagination
    tasks = tasks[offset:offset + limit]

    return TaskList(items=tasks, total=total, limit=limit, offset=offset)


@router.get("/{task_id}")
async def get_task(task_id: str) -> Task:
    """Get a specific task by ID."""
    task = task_storage.get(task_id)
    if not task:
        raise HTTPException(
            status_code=404,
            detail={"code": "NOT_FOUND", "message": "Task not found"},
        )
    return task


@router.patch("/{task_id}")
async def update_task(task_id: str, update_data: TaskUpdate) -> Task:
    """Partially update a task."""
    task = task_storage.get(task_id)
    if not task:
        raise HTTPException(
            status_code=404,
            detail={"code": "NOT_FOUND", "message": "Task not found"},
        )

    # Apply updates: exclude_unset keeps only the fields the client actually sent
    update_dict = update_data.model_dump(exclude_unset=True)
    if not update_dict:
        raise HTTPException(
            status_code=400,
            detail={
                "code": "VALIDATION_ERROR",
                "message": "At least one field must be provided",
            },
        )

    for field, value in update_dict.items():
        setattr(task, field, value)
    task.updated_at = datetime.now(timezone.utc)

    task_storage.save(task)
    return task
```

Simple In-Memory Storage

```python
# app/storage.py
from typing import TYPE_CHECKING, Optional

if TYPE_CHECKING:
    # Imported for type hints only, avoiding a circular import with app.routes.tasks
    from app.routes.tasks import Task


class TaskStorage:
    """Simple in-memory storage for demonstration."""

    def __init__(self):
        self._tasks: dict[str, "Task"] = {}

    def save(self, task: "Task") -> None:
        self._tasks[task.id] = task

    def get(self, task_id: str) -> Optional["Task"]:
        return self._tasks.get(task_id)

    def list_all(self) -> list["Task"]:
        return list(self._tasks.values())

    def delete(self, task_id: str) -> bool:
        if task_id in self._tasks:
            del self._tasks[task_id]
            return True
        return False


task_storage = TaskStorage()
```

Step 5: Enforce the Contract in CI

The spec is only as useful as its enforcement. We add two layers of CI validation:

Contract Compliance Tests

These tests verify that our implementation matches the spec:

```python
# tests/test_contract.py
"""
Contract compliance tests.

These tests verify that the API implementation conforms to the OpenAPI specification.
They catch drift between spec and implementation.

Responses are checked against the spec by OpenAPIValidationMiddleware in strict mode
(leave STRICT_VALIDATION unset or "true"): a non-conforming response raises
ContractViolationError, and TestClient re-raises server exceptions, so the test fails.
openapi-core's requests adapters are not used because FastAPI's TestClient is
built on httpx, not requests.
"""
import time
from datetime import datetime

import pytest
from fastapi.testclient import TestClient

from app.main import app


def parse_datetime(value: str) -> datetime:
    """Parse an ISO 8601 timestamp; fromisoformat() accepts "Z" only on Python 3.11+."""
    return datetime.fromisoformat(value.replace("Z", "+00:00"))


@pytest.fixture
def client():
    # raise_server_exceptions=True (the default) turns contract violations into failures
    return TestClient(app, raise_server_exceptions=True)


class TestContractCompliance:
    """Verify all endpoints conform to the OpenAPI specification."""

    def test_create_task_response_matches_spec(self, client):
        """POST /tasks response must match Task schema."""
        response = client.post("/tasks", json={"title": "Test task", "priority": "high"})
        assert response.status_code == 201
        assert response.headers["Location"] == f"/tasks/{response.json()['id']}"

    def test_create_task_validates_request(self, client):
        """POST /tasks must reject invalid requests per spec."""
        # Empty title violates minLength: 1
        assert client.post("/tasks", json={"title": ""}).status_code == 400
        # Missing title violates required
        assert client.post("/tasks", json={"priority": "high"}).status_code == 400
        # Invalid priority violates enum
        assert client.post("/tasks", json={"title": "Test", "priority": "urgent"}).status_code == 400

    def test_list_tasks_response_matches_spec(self, client):
        """GET /tasks response must match TaskList schema."""
        # Create a task first
        client.post("/tasks", json={"title": "Test"})
        response = client.get("/tasks")
        assert response.status_code == 200
        # Verify structure: all required TaskList fields are present
        assert {"items", "total", "limit", "offset"} <= response.json().keys()

    def test_get_task_not_found_matches_spec(self, client):
        """GET /tasks/{id} 404 must match Error schema."""
        response = client.get("/tasks/00000000-0000-0000-0000-000000000000")
        assert response.status_code == 404
        assert response.json()["code"] == "NOT_FOUND"

    def test_update_task_partial_update(self, client):
        """PATCH /tasks/{id} must accept partial updates per spec."""
        create_response = client.post("/tasks", json={"title": "Original", "priority": "low"})
        task_id = create_response.json()["id"]

        # Partial update - only status
        response = client.patch(f"/tasks/{task_id}", json={"status": "in_progress"})
        assert response.status_code == 200

        # Verify partial update worked
        data = response.json()
        assert data["title"] == "Original"  # Unchanged
        assert data["status"] == "in_progress"  # Updated


class TestDateTimeFormat:
    """Verify datetime fields match the spec format."""

    def test_created_at_is_iso_format(self, client):
        """created_at must be an ISO 8601 datetime string, not an epoch integer."""
        data = client.post("/tasks", json={"title": "Test"}).json()
        assert isinstance(data["created_at"], str)
        # Raises ValueError if the string is not ISO 8601
        parse_datetime(data["created_at"])

    def test_updated_at_changes_on_patch(self, client):
        """updated_at must change when task is modified."""
        response = client.post("/tasks", json={"title": "Test"})
        original_updated = parse_datetime(response.json()["updated_at"])
        task_id = response.json()["id"]

        time.sleep(0.01)  # Ensure time difference

        response = client.patch(f"/tasks/{task_id}", json={"title": "Updated"})
        # Compare parsed datetimes; raw strings may not sort correctly
        # if fractional-second precision differs between them
        assert parse_datetime(response.json()["updated_at"]) > original_updated
```

Breaking Change Detection

Add this to your CI pipeline to catch breaking changes:

# .github/workflows/api-contract.yaml

name: API Contract Validation

on:

pull_request:

paths:

- 'openapi.yaml'

- 'app/**'

jobs:

lint-spec:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Lint OpenAPI spec

uses: stoplightio/spectral-action@v0.8.10

with:

file_glob: 'openapi.yaml'

check-breaking-changes:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

with:

fetch-depth: 0

- name: Check for breaking changes

uses: oasdiff/oasdiff-action/breaking@main

with:

base: 'origin/main:openapi.yaml'

revision: 'openapi.yaml'

fail-on-diff: true

contract-tests:

runs-on: ubuntu-latest

steps:

- uses: actions/checkout@v4

- name: Set up Python

uses: actions/setup-python@v5

with:

python-version: '3.12'

- name: Install dependencies

run: pip install -r requirements.txt

- name: Run contract tests

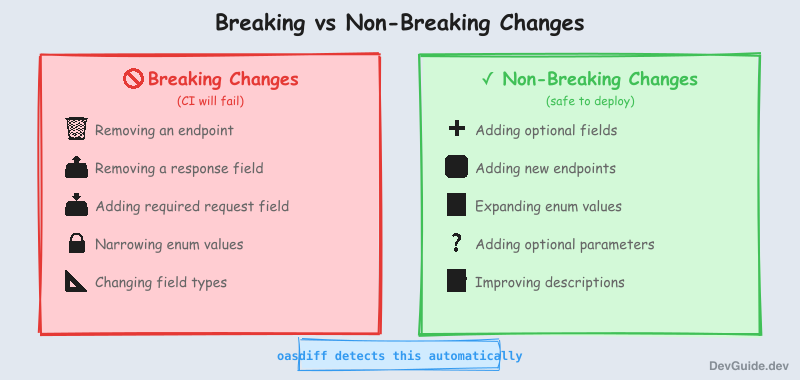

run: pytest tests/test_contract.py -vThe oasdiff check is particularly powerful - it knows the difference between breaking and non-breaking changes:

Breaking changes (will fail CI):

- Removing an endpoint

- Removing a required response field

- Adding a required request field

- Narrowing the enum values a request may contain

Non-breaking changes (allowed):

- Adding optional fields

- Adding new endpoints

- Expanding the enum values accepted in requests

- Adding optional query parameters

- Adding optional query parameters
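The classification above is mechanical enough to sketch. The stdlib-only function below compares two already-parsed specs and flags three of the listed breaking changes: removed operations, newly required request properties, and removed request enum values. It assumes inline request schemas (no `$ref` resolution) and JSON bodies only, so treat it as an illustration of the idea rather than a substitute for oasdiff. `breaking_changes` and `task_create_op` are hypothetical names.

```python
from typing import Any

HTTP_METHODS = ("get", "put", "post", "delete", "options", "head", "patch", "trace")


def _operations(spec: dict[str, Any]) -> dict[str, dict[str, Any]]:
    """Map 'METHOD /path' to its operation object."""
    return {
        f"{method.upper()} {path}": path_item[method]
        for path, path_item in spec.get("paths", {}).items()
        for method in HTTP_METHODS
        if method in path_item
    }


def _json_body_schema(operation: dict[str, Any]) -> dict[str, Any]:
    """Inline schema of the application/json request body, or {} if there is none."""
    content = operation.get("requestBody", {}).get("content", {})
    return content.get("application/json", {}).get("schema", {})


def breaking_changes(base: dict[str, Any], revision: dict[str, Any]) -> list[str]:
    findings = []
    new_ops = _operations(revision)
    for key, old_op in _operations(base).items():
        if key not in new_ops:
            findings.append(f"{key}: operation removed")
            continue
        old_schema, new_schema = _json_body_schema(old_op), _json_body_schema(new_ops[key])
        # Old clients may omit these properties, so requiring them breaks those clients
        newly_required = set(new_schema.get("required", [])) - set(old_schema.get("required", []))
        for name in sorted(newly_required):
            findings.append(f"{key}: request property '{name}' is now required")
        # Old clients may still send these values, so dropping them breaks those clients
        old_props = old_schema.get("properties", {})
        for name, new_prop in new_schema.get("properties", {}).items():
            if "enum" not in new_prop:
                continue
            dropped = set(old_props.get(name, {}).get("enum", [])) - set(new_prop["enum"])
            for value in sorted(dropped):
                findings.append(f"{key}: request enum value '{value}' removed from '{name}'")
    return findings


def task_create_op(required: list[str], priorities: list[str]) -> dict[str, Any]:
    """Build a POST /tasks operation with an inline request schema (hypothetical helper)."""
    schema = {"required": required, "properties": {
        "title": {"type": "string"},
        "priority": {"type": "string", "enum": priorities},
    }}
    return {"requestBody": {"content": {"application/json": {"schema": schema}}}}


base = {"paths": {
    "/tasks": {"post": task_create_op(["title"], ["low", "medium", "high", "critical"])},
    "/tasks/{task_id}": {"get": {}},
}}
# Adding "urgent" is fine; requiring priority, dropping "critical" and removing GET are not
revision = {"paths": {
    "/tasks": {"post": task_create_op(["title", "priority"], ["low", "medium", "high", "urgent"])},
}}

for finding in breaking_changes(base, revision):
    print(finding)
```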

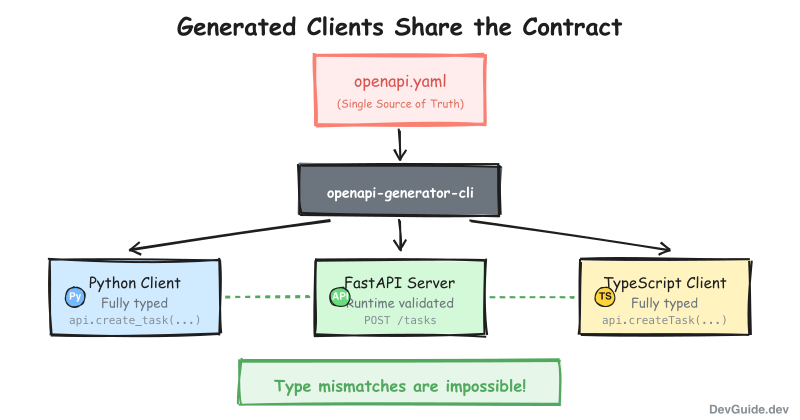

Step 6: Generate Client SDKs

The final piece: generate type-safe client code from the spec. This ensures clients and servers always agree on the contract.
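Code generation is less magical than it sounds: walk the schema, map types, emit source. The toy below turns a hand-copied subset of `TaskCreate` into a Python dataclass using only the standard library. It is a hypothetical illustration of the principle, not how OpenAPI Generator works internally (it uses templates and handles far more of the spec); `render_dataclass`, `TASK_CREATE` and `TYPE_MAP` are invented names.

```python
from typing import Any

# Hand-copied subset of components/schemas/TaskCreate. The Priority $ref is
# flattened to a plain string with its default; the enum is omitted for brevity.
TASK_CREATE: dict[str, Any] = {
    "required": ["title"],
    "properties": {
        "title": {"type": "string"},
        "description": {"type": "string"},
        "priority": {"type": "string", "default": "medium"},
        "due_date": {"type": "string", "format": "date-time"},
    },
}

# JSON Schema type -> Python annotation. A real generator would also map
# formats (date-time -> datetime), enums, arrays and nested objects.
TYPE_MAP = {"string": "str", "integer": "int", "number": "float", "boolean": "bool"}


def render_dataclass(name: str, schema: dict[str, Any]) -> str:
    """Emit Python source for a dataclass mirroring an object schema."""
    required = set(schema.get("required", []))
    lines = ["from dataclasses import dataclass", "from typing import Optional", "", "",
             "@dataclass", f"class {name}:"]
    # Fields without defaults must precede fields with defaults, so required ones go first
    fields = sorted(schema["properties"].items(), key=lambda item: item[0] not in required)
    for prop, rules in fields:
        py_type = TYPE_MAP[rules["type"]]
        if prop in required:
            lines.append(f"    {prop}: {py_type}")
        elif "default" in rules:
            lines.append(f"    {prop}: {py_type} = {rules['default']!r}")
        else:
            lines.append(f"    {prop}: Optional[{py_type}] = None")
    return "\n".join(lines) + "\n"


source = render_dataclass("TaskCreate", TASK_CREATE)
print(source)

# Load the generated code and use it like any hand-written class
namespace: dict[str, Any] = {}
exec(source, namespace)
task = namespace["TaskCreate"](title="Review PR")
print(task)  # TaskCreate(title='Review PR', description=None, priority='medium', due_date=None)
```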

```shell
# Install OpenAPI Generator
npm install -g @openapitools/openapi-generator-cli

# Generate Python client
openapi-generator-cli generate \
  -i openapi.yaml \
  -g python \
  -o ./generated/python-client \
  --additional-properties=packageName=task_tracker_client

# Generate TypeScript client
openapi-generator-cli generate \
  -i openapi.yaml \
  -g typescript-fetch \
  -o ./generated/ts-client
```

Using the generated Python client:

```python
# Using the generated client
from task_tracker_client import ApiClient, Configuration, TasksApi
from task_tracker_client.models import TaskCreate

config = Configuration(host="http://localhost:8000")
client = ApiClient(config)
api = TasksApi(client)

# Create task - fully typed
task = api.create_task(TaskCreate(
    title="Review PR",
    priority="high",
))

print(f"Created: {task.id}")
print(f"Status: {task.status}")  # IDE autocomplete works

# List with filters
tasks = api.list_tasks(status="pending", limit=10)
for t in tasks.items:
    print(f"- {t.title} ({t.priority})")
```

The client is generated from the same spec the server validates against, so the two cannot silently disagree: when a field is renamed or retyped in the spec, the regenerated client changes with it, and code still using the old shape fails type checks instead of failing in production.

The Complete Repository

Here’s the full project setup for you to clone and run:

```shell
# Clone and setup
git clone https://github.com/devguide-dev/contract-first-task-tracker
cd contract-first-task-tracker
python -m venv venv
source venv/bin/activate
pip install -r requirements.txt

# Run the API
make run
# API available at http://localhost:8000

# Run tests
make test

# Validate spec
make lint

# Generate clients
make generate-clients
```

requirements.txt

```text
fastapi>=0.109.0
uvicorn>=0.27.0
pydantic>=2.5.0
openapi-core>=0.19.0
httpx>=0.26.0
pytest>=8.0.0
pytest-asyncio>=0.23.0
```

Makefile

```makefile
.PHONY: run test lint mock generate-clients validate-breaking

run:
	uvicorn app.main:app --reload --host 0.0.0.0 --port 8000

test:
	pytest tests/ -v

lint:
	spectral lint openapi.yaml --ruleset .spectral.yaml

mock:
	prism mock openapi.yaml --port 4010

# packageName matches the task_tracker_client import used in the client example
generate-clients:
	openapi-generator-cli generate -i openapi.yaml -g python -o generated/python-client --additional-properties=packageName=task_tracker_client
	openapi-generator-cli generate -i openapi.yaml -g typescript-fetch -o generated/ts-client

validate-breaking:
	oasdiff breaking origin/main:openapi.yaml openapi.yaml
```

Dockerfile

```dockerfile
FROM python:3.12-slim

WORKDIR /app

COPY requirements.txt .
RUN pip install --no-cache-dir -r requirements.txt

COPY openapi.yaml .
COPY app/ app/

EXPOSE 8000

CMD ["uvicorn", "app.main:app", "--host", "0.0.0.0", "--port", "8000"]
```

docker-compose.yaml

```yaml
version: '3.8'

services:
  api:
    build: .
    ports:
      - '8000:8000'
    volumes:
      - ./openapi.yaml:/app/openapi.yaml:ro
      - ./app:/app/app:ro
    environment:
      - STRICT_VALIDATION=true

  mock:
    image: stoplight/prism:4
    command: mock /app/openapi.yaml --host 0.0.0.0
    ports:
      - '4010:4010'
    volumes:
      - ./openapi.yaml:/app/openapi.yaml:ro
```

When Contract-First Doesn’t Fit

This approach has real costs:

Upfront design time: You can’t just start coding. You need to think through the API design before implementation. For exploratory prototypes, this slows you down.

Spec maintenance: The spec is now critical infrastructure. It needs reviews, versioning, and careful change management.

Tooling complexity: You’re adding linters, validators, generators, and mock servers to your stack. Each is a dependency to maintain.

Learning curve: Your team needs to understand OpenAPI syntax, validation semantics, and the tooling ecosystem.

Contract-first works best when:

- Multiple teams consume your API

- API stability matters (breaking changes are expensive)

- You’re building public/partner APIs

- Frontend and backend teams work in parallel

- You have compliance requirements around API documentation

It’s probably overkill for:

- Internal microservices with one consumer

- Rapid prototypes where the API is still being discovered

- Teams of one where you’re writing both client and server

- GraphQL APIs (which have their own schema-first approach)

The Executable Contract Mindset

The spec-as-documentation mindset treats OpenAPI like a README - nice to have, maintained when convenient, trusted cautiously.

The spec-as-executable-contract mindset treats it like code:

- It’s tested

- It’s validated in CI

- Breaking changes are caught automatically

- Violations fail the build

- Clients are generated from it, not written by hand

When your spec is an executable contract, the question “does the implementation match the documentation?” becomes meaningless. They’re the same thing. The spec IS the implementation’s interface, enforced at runtime, in CI, and (through generated clients) at compile time.

The API documentation said created_at was an ISO timestamp. The server returned an ISO timestamp. Because it had no choice.

The complete working repository is available at github.com/devguide-dev/contract-first-task-tracker.